Photosmyth - A generative AI

25 March, 2024 - 5 min read

Generative AI applications have revolutionized how we approach creativity, problem-solving, and innovation. With advancements in machine learning and deep learning techniques, such applications have become increasingly accessible, allowing developers to test and deploy them relatively quickly.

In this blog post, I'll share my journey of developing a generative AI application, covering the challenges, breakthroughs, and insights gained along the way.

A couple of weeks ago, I developed Photosmyth, a generative AI application built with Streamlit and capable of rapid on-demand image synthesis.

To develop Photosmyth, I used sdxl-turbo, a fast text-to-image model that can synthesize photorealistic images from a text prompt. It is provided through NVIDIA AI Foundation Models and Endpoints. sdxl-turbo is a state-of-the-art AI model hosted on the NVIDIA API Catalog, which provides easy access to model APIs optimized on the NVIDIA accelerated computing stack, making them fast and easy to evaluate.

NVIDIA AI models

I used NVIDIA's open source connector, NVIDIA AI Foundation Endpoints, which integrates with LangChain. This connector provides easy access to NVIDIA-hosted models and supports chat, embedding, code generation, SteerLM, multimodal, and RAG.

Setup -

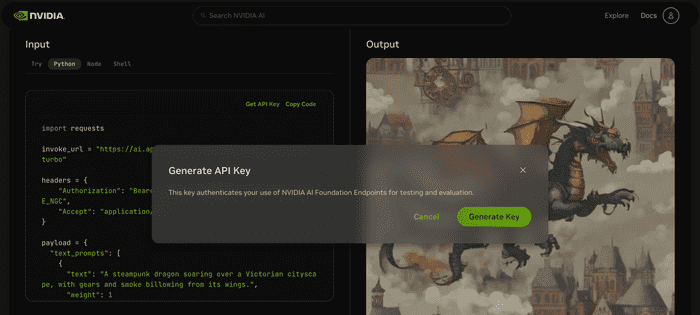

- The first step involves creating a free NGC account.

- Navigate to the page of the model that you want to use (sdxl-turbo in my case).

- Click Get API key in the Input section under the language you are using.

- Save the key, as this is our NVIDIA_API_KEY.

You need to export the key in your shell to access the model.

    export NVIDIA_API_KEY='...'

I have observed that the same key works for all the models in the NVIDIA API Catalog.
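Since a missing key only shows up once the first request fails, it can help to check for it when the app starts. The following is a minimal sketch, not part of the Photosmyth repository: the function name `require_api_key` and its error message are my own assumptions; only the variable name NVIDIA_API_KEY comes from the setup above.

```python
import os


def require_api_key(environ=os.environ, name="NVIDIA_API_KEY"):
    """Return the API key from the environment, or fail with a clear message.

    `require_api_key` is a hypothetical helper, not part of any NVIDIA or
    LangChain package.
    """
    key = environ.get(name, "").strip()
    if not key:
        raise RuntimeError(
            f"{name} is not set. Export it in your shell before starting the app."
        )
    return key
```

Calling `require_api_key()` at the top of the app would stop it early, with a readable error, if the export step was skipped.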

Running the code

The code for photosmyth is available on GitHub.

To run the code, follow these simple steps -

Clone the repository

    git clone https://github.com/itsiprikshit/photosmyth.git

Create a virtual environment

    python3 -m virtualenv venv

Activate the virtual environment

    source venv/bin/activate

Install the required dependencies

    pip install -r requirements.txt

Start the streamlit app

    streamlit run app.py --server.port 8012

Navigate to http://localhost:8012 on your browser.

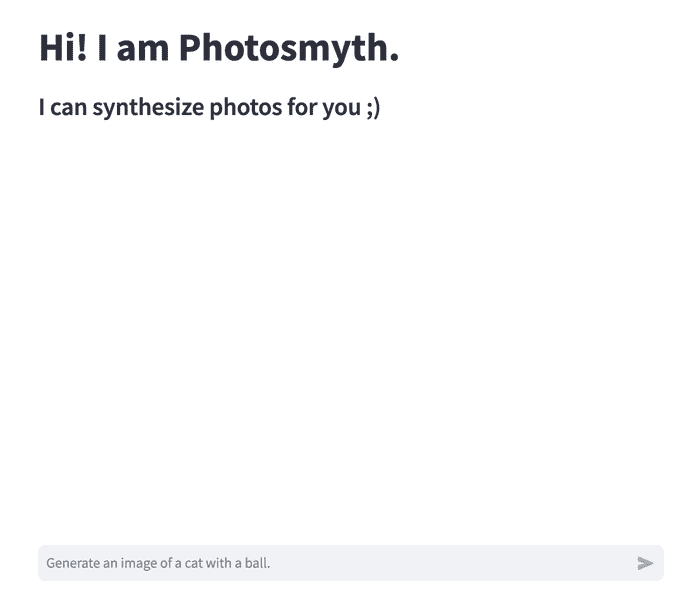

You've got your personal AI, Photosmyth, that generates images on demand. It looks like this -

You can type your prompt into the chat box, and the AI will generate images for you.

Let's dive into code

Let us walk through several code snippets outlining the implementation of Photosmyth.

First, I import the ChatNVIDIA class from the langchain_nvidia_ai_endpoints package, the LangChain connector for NVIDIA-hosted models, and use it to instantiate the model.

    from langchain_nvidia_ai_endpoints import ChatNVIDIA

    def initialize():
        # Connect to the hosted sdxl-turbo model
        llm = ChatNVIDIA(model="ai-sdxl-turbo")
        # Replace the default request payload with the one sdxl-turbo expects
        llm.client.payload_fn = create_payload
        # Decode the model's base64 response into an image buffer
        chain = llm | base64_to_img
        return chain

sdxl-turbo has strict parameter expectations that LangChain does not support by default. To work around this, the connector lets us override the payload of the underlying client through payload_fn: I assign it a function that builds the payload that is eventually passed to the model.

    def create_payload(data):
        payload = {'text_prompts': []}
        if 'messages' in data:
            for message in data['messages']:
                p = {'text': message['content']}
                payload['text_prompts'].append(p)
        return payload

After instantiating the model, I chain the llm instance with base64_to_img, which decodes the base64 image returned by the model.

    import base64
    from io import BytesIO

    def base64_to_img(data):
        # The generated image arrives base64-encoded in the response metadata
        artifacts = data.response_metadata['artifacts']
        img = artifacts[0]['base64']
        return BytesIO(base64.b64decode(img))

Finally, to invoke the chain, I just used the invoke method and passed the user input as a string.

    img = chain.invoke(user_input)

The returned image is visualized as the model response in the UI.
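To see what the two helpers do without calling the API, here is a minimal offline sketch. The sample prompt, the placeholder bytes, and the `SimpleNamespace` object standing in for the model response are assumptions for illustration; only the payload and response shapes are taken from the functions above.

```python
import base64
from io import BytesIO
from types import SimpleNamespace


def create_payload(data):
    # Same logic as above: map each chat message to a text prompt
    payload = {"text_prompts": []}
    if "messages" in data:
        for message in data["messages"]:
            payload["text_prompts"].append({"text": message["content"]})
    return payload


def base64_to_img(data):
    # Same logic as above: decode the first base64 artifact into a buffer
    artifacts = data.response_metadata["artifacts"]
    return BytesIO(base64.b64decode(artifacts[0]["base64"]))


# Hypothetical request in the shape create_payload reads
request = {"messages": [{"role": "user", "content": "a red fox in the snow"}]}
payload = create_payload(request)
print(payload)  # {'text_prompts': [{'text': 'a red fox in the snow'}]}

# Hypothetical stand-in for the model response (placeholder bytes, not a real image)
fake_bytes = b"placeholder-image-bytes"
fake_response = SimpleNamespace(
    response_metadata={
        "artifacts": [{"base64": base64.b64encode(fake_bytes).decode("ascii")}]
    }
)
img = base64_to_img(fake_response)
print(img.getvalue() == fake_bytes)  # True
```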

To create the chat component, I used Streamlit.
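A chat component along these lines could be wired up as in the sketch below. This is not the code from the repository: `handle_turn` and `render_chat` are hypothetical names, and the message layout (a list of dicts with `role` and `content`) is an assumption based on how the messages are read later in this post. The Streamlit calls used are `st.session_state`, `st.chat_message`, `st.chat_input`, `st.markdown`, and `st.image`.

```python
def handle_turn(messages, user_input, generate):
    """Record one chat turn and return the generated image.

    `generate` is any callable that maps a prompt string to an image,
    for example `chain.invoke`.
    """
    messages.append({"role": "user", "content": user_input})
    image = generate(user_input)
    messages.append({"role": "assistant", "content": image})
    return image


def render_chat(chain):
    # Imported here so handle_turn can be used without Streamlit installed
    import streamlit as st

    if "messages" not in st.session_state:
        st.session_state.messages = []

    # Streamlit reruns the script on every interaction, so replay the history
    for message in st.session_state.messages:
        with st.chat_message(message["role"]):
            if message["role"] == "user":
                st.markdown(message["content"])
            else:
                st.image(message["content"])

    user_input = st.chat_input("Describe an image")
    if user_input:
        with st.chat_message("user"):
            st.markdown(user_input)
        image = handle_turn(st.session_state.messages, user_input, chain.invoke)
        with st.chat_message("assistant"):
            st.image(image)
```

In an `app.py` started with `streamlit run`, `render_chat(initialize())` would be called at module level. Keeping the turn logic in `handle_turn` makes it possible to exercise it with a stub generator instead of the real model.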

Maintaining Context

After putting all the pieces together, I could interact with the AI, and it generated images based on my input. However, it treated every input as an independent query. I realized that I wasn't saving the conversation context, so I tweaked the app to save it.

    context = ''
    for message in st.session_state.messages:
        # Only the user's prompts contribute to the context
        if message['role'] == 'user':
            context += message['content']

I now provide the entire conversation context to the model on every query, so the images it generates are based on the whole conversation. I am aware that this is a very naive method of providing context to the model, since it sends the entire conversation with every query.
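One detail of the loop above is that it concatenates prompts with nothing between them, so "a castle" followed by "at sunset" becomes "a castleat sunset". A small variant that joins the prompts with a separator is sketched below; the function name `build_context` and the choice of ", " as the separator are my assumptions, not part of the original app.

```python
def build_context(messages, separator=", "):
    """Join all user prompts into a single context string.

    `messages` is a list of dicts with 'role' and 'content' keys, the same
    shape the loop above reads from st.session_state.messages.
    """
    user_prompts = [m["content"] for m in messages if m["role"] == "user"]
    return separator.join(user_prompts)


history = [
    {"role": "user", "content": "a castle"},
    {"role": "assistant", "content": "<image>"},
    {"role": "user", "content": "at sunset"},
]
print(build_context(history))  # a castle, at sunset
```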

I plan to enhance the capabilities of my AI by incorporating more sophisticated methods for contextual understanding. I could summarise the conversation using the Natural Language Toolkit (NLTK) or spaCy and provide the summary as context to the model. I'm also considering a feature where users can upload relevant documents; with a RAG (Retrieval-Augmented Generation) pipeline, I could extract context from these documents to enrich the model's understanding and provide more meaningful responses.

Conclusion

Reflecting on my journey with Photosmyth, I'm truly amazed by the power of generative AI. It's been an exciting adventure exploring how AI can unleash creativity in such a simple yet profound way. By leveraging NVIDIA's advanced AI models, Photosmyth demonstrates the remarkable potential of generative AI in rapidly synthesizing photorealistic images from text prompts. This journey has been enlightening, and I'm eager to see how Photosmyth continues to inspire and evolve.

I hope you enjoyed reading about my experience.

Connect with me on LinkedIn!

Hi, I am Prikshit Tekta. I am a full-stack software engineer.